A/B testing is crucial for creating successful mobile games, especially freemium ones. But common mistakes like testing too many variables, stopping tests early, or using poor data samples can lead to unreliable results. Here’s how to avoid these pitfalls:

- Test one variable at a time to identify what works.

- Run each test for its planned duration and sample size, then check for statistical significance.

- Use large, clean data samples for accurate analysis.

A/B Testing Basics for Mobile Games

Core A/B Testing Concepts

A/B testing, or split testing, is the process of comparing two versions of a game element to see which one performs better. For mobile games, it’s a way for developers to make informed decisions about features, mechanics, and monetization strategies based on data.

Here’s how it works:

- Control group: Players interacting with the original version.

- Test group: Players experiencing the modified version.

- Success metrics: Key measurements like retention rate, average revenue per user (ARPU), or engagement time.

- Statistical significance: A check that the observed difference between groups is unlikely to be the product of random variation alone.

These concepts are the foundation for testing in mobile games, especially when dealing with freemium models.
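To make the statistical-significance idea concrete, here is a minimal sketch of a two-proportion z-test, one common way to compare a rate metric such as day-1 retention between a control and a test group. The function name `two_proportion_z_test` and the player counts are illustrative assumptions, not taken from any particular analytics tool.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion-style rates.

    conv_a / n_a: converted players and total players in the control group.
    conv_b / n_b: the same counts for the test group.
    Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups behave the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (every player or no player converted)
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical numbers: 40.0% vs 43.0% day-1 retention, 10,000 players each
z, p = two_proportion_z_test(4000, 10_000, 4300, 10_000)
print(f"z = {z:.2f}, p = {p:.5f}")
```

A p-value below the threshold chosen before the test (0.05 is a common choice) suggests the difference is unlikely to be noise. This sketch only suits rate metrics; revenue metrics such as ARPU are usually skewed and need a different test.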

A/B Testing in Freemium Games

Freemium games need to strike a balance between keeping players happy and generating revenue. A/B testing plays a big role in fine-tuning these elements by analyzing how players respond to changes.

Common areas to test in freemium games include:

- Monetization: Testing in-app purchase pricing, bundle offers, and special promotions.

- Player progression: Adjusting level difficulty, rewards, and resource distribution.

- User interface: Improving store layouts, button placement, and visual cues.

- Game mechanics: Tweaking tutorials, gameplay flow, and social features.

Mobile Game Testing Requirements

Testing mobile games comes with its own set of challenges, like platform restrictions and diverse player behavior. To run effective A/B tests, developers should focus on:

- Having a large enough sample size to ensure reliable results.

- Running tests long enough to reflect weekly player habits.

- Following platform-specific rules for iOS and Android.

- Using real-time analytics to make quick, informed decisions.

Testing strategies may vary depending on the game’s genre and revenue model. For freemium games, it’s important to track both quantitative data (like revenue and retention) and qualitative feedback (like player comments and support tickets) to identify areas for improvement.

Top A/B Testing Errors

Testing Multiple Changes at Once

Making several changes at the same time – like tweaking the difficulty curve and adjusting in-app purchase prices – makes it impossible to know which change influenced player behavior. To get clear, actionable results, focus on testing one variable at a time. This way, you can pinpoint exactly what’s driving the outcomes.

Stopping Tests Too Early

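Cutting a test short, whether because of time constraints or because early numbers look promising, can lead to incomplete or misleading results. Without enough data, you can’t be sure your findings are statistically sound, and checking repeatedly and stopping at the first significant reading raises the odds of a false positive. Decide the sample size and duration up front, then let the test run its full course so your decisions rest on solid evidence.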
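The risk of stopping early can be illustrated with a small simulation. The sketch below runs repeated A/A tests, where both groups share the same true conversion rate, so every "significant" result is a false positive. It compares how often a result looks significant at any interim check with how often it does at the planned end. All names and parameters (`false_positive_rates`, the 30% rate, ten checks) are assumptions chosen for illustration.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rates(runs=300, players=2000, checks=10, rate=0.3, alpha=0.05, seed=7):
    """Return (rate with peeking, rate at planned end) for simulated A/A tests."""
    rng = random.Random(seed)
    step = players // checks
    peeking_hits = 0
    final_hits = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        significant_at_any_check = False
        significant = False
        for check in range(1, checks + 1):
            # Both groups have the same true rate, so any "win" is noise
            conv_a += sum(rng.random() < rate for _ in range(step))
            conv_b += sum(rng.random() < rate for _ in range(step))
            n = check * step
            significant = p_value(conv_a, n, conv_b, n) < alpha
            significant_at_any_check = significant_at_any_check or significant
        peeking_hits += significant_at_any_check
        final_hits += significant  # verdict at the planned end only
    return peeking_hits / runs, final_hits / runs

peeking, planned = false_positive_rates()
print(f"false positives with peeking: {peeking:.1%}, at planned end: {planned:.1%}")
```

Because the final check is one of the interim checks, the peeking rate can never be lower than the planned-end rate, and in practice it tends to be noticeably higher.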

Poor Data Sample Management

Working with small test groups or messy data can lead to unreliable conclusions. Make sure your test groups are large enough and rely on robust statistical tools to analyze the results. Clean, well-organized data is the key to accurate testing.

| Testing Aspect | Common Mistake | Best Practice |
|---|---|---|
| Changes | Testing multiple variables simultaneously | Test one variable at a time |
| Duration | Ending tests too early | Run tests for their planned duration before judging significance |
| Sample Size | Using insufficient data | Use large test groups and proper statistical tools |

It’s clear that isolating variables, running tests for the right duration, and managing data properly are essential for meaningful results. Next, we’ll explore solutions to these common testing challenges.

How to Fix Testing Problems

Create a Test Plan

A well-thought-out test plan is key to identifying features that drive revenue and improve engagement. It should outline test variables, metrics, and how long the test will run.

Key elements of a test plan include:

- Clear test parameters

- Metrics to measure success (e.g., retention, monetization, engagement)

- Test duration based on player volume

- Methods for collecting and analyzing data
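One way to keep these elements together is to record the plan as a small data structure before the test starts. The sketch below is only an illustration; the class name `ExperimentPlan`, its fields, and the example values are assumptions rather than part of any specific tool.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ExperimentPlan:
    name: str
    variable: str            # the single element being changed
    primary_metric: str      # metric that decides success
    players_per_group: int   # sample size fixed before the test starts
    duration_days: int       # planned run time
    analysis_method: str     # how results will be evaluated

    def __post_init__(self):
        # Reject plans that cannot produce a usable test
        if self.players_per_group <= 0:
            raise ValueError("players_per_group must be positive")
        if self.duration_days <= 0:
            raise ValueError("duration_days must be positive")

# Hypothetical plan for a pricing test
plan = ExperimentPlan(
    name="starter_pack_price",
    variable="starter pack price",
    primary_metric="purchase conversion",
    players_per_group=9_500,
    duration_days=14,
    analysis_method="two-proportion z-test",
)
print(plan)
```

Freezing the dataclass makes it harder to change the sample size or duration quietly once the test is running.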

Set Clear Test Goals

Define objectives that are easy to measure and track. Focus on performance metrics such as:

- Target increases in daily active users (DAUs)

- Longer average session durations

- Higher conversion rates for in-app purchases

- Improved player retention over specific timeframes

Use Data Analysis Tools

Choose analytics tools that can handle large amounts of test data effectively. Look for tools offering features like:

| Feature | Purpose | Benefit |
|---|---|---|
| Statistical Significance | Confirms test results are reliable | Ensures decisions are based on sound data |
| Segmentation | Highlights patterns in player behavior | Helps focus on targeted improvements |
| Real-time Monitoring | Tracks progress as it happens | Allows quick adjustments if needed |
| Visualization Tools | Simplifies complex data | Makes it easier to share findings |

These tools help you interpret test results and organize your test groups for accurate comparisons.

Organize Test Groups

To get reliable results, you need well-structured player segments. Here’s how to do it:

- Keep control and test groups completely separate

- Make sure groups are large enough to produce statistically valid results

- Match groups based on shared demographics and behaviors

- Prevent overlap between groups to avoid skewed data
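A common way to keep groups separate and stable is to assign each player deterministically from a hash of their ID, so the same player always lands in the same group. The sketch below assumes string player IDs and a hypothetical `assign_group` helper. Including the experiment name in the hash keeps assignments in one test independent of assignments in another.

```python
import hashlib

def assign_group(player_id: str, experiment: str, groups=("control", "test")) -> str:
    """Deterministically map a player to one group for a given experiment."""
    key = f"{experiment}:{player_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(groups)
    return groups[bucket]

# The same player always gets the same group within one experiment
print(assign_group("player-42", "shop_layout"))
print(assign_group("player-42", "shop_layout"))
```

Because assignment is effectively random with respect to player traits, large groups end up with similar mixes of demographics and behaviors without manual matching.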

Learn from Results

Fixing earlier testing problems means using results to improve future tests. Take what you’ve learned and apply it systematically.

Steps to build on results:

- Record changes and monitor their long-term effects

- Use insights to guide new feature development

- Set up a feedback loop to keep refining your process

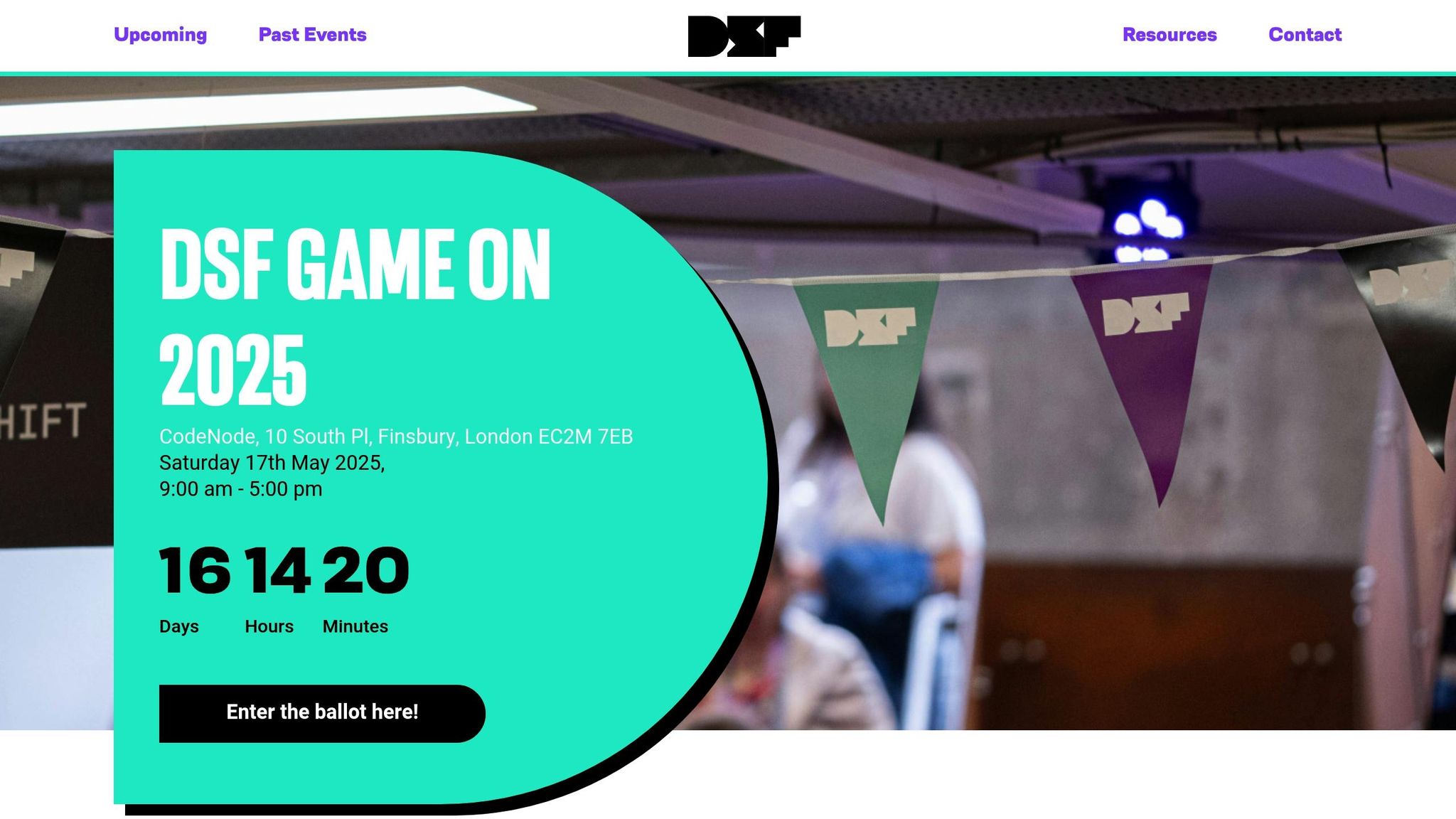

AB testing in mobile games – Data Science Festival


Testing Mistakes and Solutions Guide

Avoiding common A/B testing errors is crucial for improving your mobile game’s performance. This guide highlights typical mistakes and offers practical ways to refine your testing approach.

Quick Reference Table

| Testing Mistake | Impact | Solution | Implementation Tips |
|---|---|---|---|
| Undefined Test Goals | Resources wasted, unclear results | Set clear, measurable objectives | Align objectives with your key performance indicators (KPIs) |
| Over-focusing on Metrics | Overlooks qualitative insights | Balance data with user feedback | Combine user feedback with quantitative analysis |

These insights help ensure your tests stay focused and deliver actionable results.

Key Factors for Effective Testing

- Sample Size Calculation: Make sure your test groups are large enough to produce statistically meaningful results. Use your daily active users and desired confidence level to calculate the required sample size.

- Test Isolation: Keep tests separate to avoid overlapping effects that could skew results.

- Data Collection: Track primary metrics, document all test variations, and record any technical issues or anomalies.

- Results Analysis: Evaluate results for statistical significance and their impact on key indicators. Look at both short-term outcomes and how they might influence player behavior over time.

Professional Testing Help

When A/B testing becomes more complex, turning to experts with specialized knowledge and proven methods can fine-tune your testing strategies. Here’s how professional services can elevate both game design and testing processes.

Mobile Game Design Services

Adrian Crook & Associates offers expertise in freemium mobile game design and product management, providing frameworks that enhance both revenue and player engagement. Their services include:

- Game Economy Modeling: Analyze and adjust monetization strategies while keeping players satisfied.

- Live Operations Optimization: Use data-driven insights to improve ongoing game performance.

- Feature Identification: Pinpoint and prioritize key elements to maximize the impact of testing.

These design services, combined with tailored testing frameworks, ensure more precise and effective testing outcomes.

Custom Testing Solutions

Custom consulting addresses specific testing roadblocks with targeted solutions. Examples include:

| Testing Challenge | Professional Solution | Business Impact |
|---|---|---|
| Unclear Test Goals | KPI Analysis & Strategic Planning | Sharper focus on business-aligned testing objectives |
| Data Interpretation | Expert Analysis & Actionable Insights | Clearer understanding of results and next steps |
| Test Design | Custom Testing Frameworks | Reliable experiments with statistically sound results |

"I’ve known Adrian since 2008, when he was one of a select group of people ahead of the curve in terms of understanding how mobile, social and free-to-play – at the time, considered ’emerging trends’ for games – would impact the games space. I’m excited to see this experience still serves his work. I’ve learned a lot from him, and he’s friendly, supportive and a pleasure to work with." – Leigh Alexander [1]

With a track record of over 300 successful client engagements since 2008 [1], professional consultants bring the expertise needed to tackle testing challenges. Their experience helps developers avoid costly mistakes and achieve more reliable results from A/B testing efforts.

Conclusion

A/B testing plays a crucial role in boosting both player engagement and revenue in freemium mobile games. Its results are only as trustworthy as the process behind them: isolate one variable, run each test for its planned duration, and work from large, clean samples.

"Working with AC&A allowed us to clarify key issues with our game design while we were still early enough in the development process to make changes. Because of the specific recommendations we received from Jordan, our game is both more fun for players and able to monetize those players more effectively." – Richard Barnwell, CEO [1]

By applying proven methods, developers can turn test results into actionable improvements for their games. These refined practices align with the strategies highlighted earlier:

- Focus on refining core gameplay loops to enhance engagement and spending

- Make decisions based on clear, data-backed insights

- Address potential issues early in development

- Improve monetization strategies while maintaining a positive player experience

With expert guidance, testing becomes more efficient and helps ensure business success. Using well-structured testing methods allows developers to create games that achieve business goals while providing experiences that keep players coming back.

FAQs

How can I determine the right sample size for A/B testing in mobile games?

Determining the right sample size for A/B testing in mobile games is crucial to ensure reliable results. The main inputs are the baseline value of the metric you are testing, the smallest difference between variations you want to detect (effect size), the significance level, and the statistical power you are aiming for. The size of your player base then determines how long it takes to collect that sample.

Start by estimating how many players you need to detect meaningful changes in key metrics, such as retention or revenue per user. Tools like statistical sample size calculators can help, but always account for variability in player behavior. Testing with too small a sample may lead to inconclusive results, while overly large samples can waste time and resources.

For mobile games, it’s often best to segment players based on similar behaviors or demographics to ensure more accurate insights. This approach helps tailor your game improvements to specific player groups, enhancing personalization and overall engagement.
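For a rate metric such as retention or purchase conversion, the sample-size estimate described above can be sketched with the standard normal-approximation formula for comparing two proportions. The function name `required_players_per_group`, the default 5% significance level, and the 80% power are assumptions chosen for illustration. A dedicated sample size calculator may use a slightly different formula and give slightly different numbers.

```python
import math
from statistics import NormalDist

def required_players_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate players needed per group to detect baseline -> expected rate.

    Uses n = (z_alpha/2 + z_beta)^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2.
    """
    effect = expected_rate - baseline_rate
    if effect == 0:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical goal: detect a lift in day-1 retention from 40% to 42%
print(required_players_per_group(0.40, 0.42))
```

Halving the difference you want to detect roughly quadruples the required sample, which is why small expected lifts call for long tests or large player bases.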

How can mobile game developers ensure their A/B test results are accurate and not influenced by random chance?

To ensure your A/B test results are statistically significant and reliable, start by determining an appropriate sample size before running the test. This helps avoid skewed results caused by too small or incomplete data sets. Use statistical tools or calculators to define this based on your game’s metrics.

Additionally, run the test for a sufficient duration to account for player behavior variations across different days or times. Avoid stopping the test early, even if initial results seem clear. Finally, calculate the p-value or confidence interval after the test to confirm whether the observed differences are meaningful or just due to random fluctuations. Aim for a confidence level of at least 95% to ensure robust results.
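As a sketch of the confidence-interval check mentioned above, the following computes a normal-approximation (Wald) interval for the difference between two rates. The helper name `diff_confidence_interval` and the sample counts are hypothetical, and the approximation assumes reasonably large groups.

```python
import math
from statistics import NormalDist

def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Confidence interval for (test rate - control rate)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Unpooled standard error of the difference between the two rates
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se

# Hypothetical result: 4,000 of 10,000 control vs 4,300 of 10,000 test players
low, high = diff_confidence_interval(4000, 10_000, 4300, 10_000)
print(f"95% CI for the lift: {low:.4f} to {high:.4f}")
```

If the whole interval lies above zero, the data support a real improvement at that confidence level. An interval that includes zero means the test has not ruled out "no difference".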

How can A/B testing help balance monetization and player satisfaction in freemium mobile games?

A/B testing is a powerful tool for finding the right balance between monetization and player satisfaction in freemium mobile games. By testing different variations of in-game features, pricing models, or reward systems, developers can identify what resonates best with their audience while optimizing revenue streams.

To achieve this balance, focus on player-centric experiments. For example, test different price points for in-app purchases to ensure affordability without compromising profitability. Similarly, experiment with reward frequencies or ad placements to maintain engagement without frustrating players. Always use data-driven insights from your tests to make informed decisions and iterate on your designs.
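One way to encode this balance is a guardrail check: accept a variant only if revenue improves and a player-satisfaction proxy such as retention does not fall by more than an agreed tolerance. The sketch below is purely illustrative. `VariantResult`, `passes_guardrail`, and the one-percentage-point tolerance are assumptions, and each difference should already have been checked for statistical significance before this rule is applied.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VariantResult:
    arpu: float          # average revenue per user
    d7_retention: float  # share of players still active on day 7

def passes_guardrail(control, variant, max_retention_drop=0.01):
    """True if the variant earns more without hurting retention too much."""
    revenue_up = variant.arpu > control.arpu
    retention_drop = control.d7_retention - variant.d7_retention
    return revenue_up and retention_drop <= max_retention_drop

# Hypothetical results for a price test
control = VariantResult(arpu=0.42, d7_retention=0.200)
higher_price = VariantResult(arpu=0.47, d7_retention=0.195)
print(passes_guardrail(control, higher_price))
```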